In the previous installment of our series, we delved into the democratizing power of Large Language Models (LLMs) and their potential to reshape the AI industry’s power dynamics. But, as with many transformative technologies, there is a catch: because these models are generalists, their vast potential usually has to be unlocked through targeted adjustments that meet specific needs.

Open-source LLMs, powerful as they are, typically arrive as broad, general-purpose solutions. To make them as efficient and relevant as possible, they need to be adapted to particular applications. This article navigates the main adaptation techniques: fine-tuning, prompt engineering, and the intriguing Retrieval-Augmented Generation (RAG). Through this exploration, we aim to equip readers with the knowledge to harness the true potential of LLMs in their specific contexts.

Join us on this deep dive into the art and science of adapting open-source LLMs, setting the stage for a broader understanding and appreciation of the world of open-source AI.

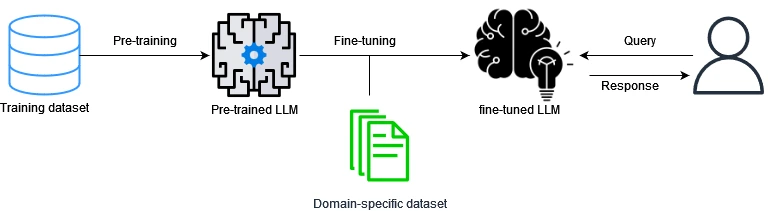

Fine-Tuning: Beyond the Foundations

In the world of LLMs, one size doesn’t fit all. That’s where fine-tuning comes into play, a process that transforms a generalized AI model into a task-specific expert. At its essence, fine-tuning can be compared to a generalist doctor undergoing specialized training to become a cardiologist or neurologist – they have the foundation, but need to tailor their expertise to the specific context.

- The Foundation: Most LLMs, when initially trained, are exposed to vast amounts of text data, allowing them to learn grammar, facts about the world, and some degree of reasoning. This broad knowledge base forms the foundation.

- Specialization Begins: To adapt this general knowledge to a specific application, the model undergoes additional training on a narrower dataset relevant to the task. For example, to develop a legal assistant bot, an LLM might be fine-tuned on legal documents and case law.
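In practice, the “narrower dataset” is usually a file of input/output examples. The sketch below shows one way such a file might be prepared for the legal-assistant example. It assumes a JSON Lines layout with hypothetical `prompt` and `completion` fields (the real schema depends on the fine-tuning framework you use), and the Q&A pairs are illustrative placeholders, not a real dataset. It also drops blank and duplicate records, since flawed examples propagate directly into the fine-tuned model.

```python
import json

# Illustrative placeholder records; a real legal fine-tuning set would contain
# thousands of expert-reviewed examples.
RAW_PAIRS = [
    {"question": "What is 'consideration' in contract law?",
     "answer": "Something of value that each party gives or promises in exchange for the other's promise."},
    {"question": "What is 'consideration' in contract law?",  # exact duplicate, will be dropped
     "answer": "Something of value that each party gives or promises in exchange for the other's promise."},
    {"question": "", "answer": "Orphan answer with no question."},  # incomplete, will be dropped
]

INSTRUCTION = "You are a legal assistant. Answer concisely and note when a lawyer should be consulted."


def build_jsonl(pairs, instruction):
    """Convert Q&A pairs into JSON Lines, skipping blank and duplicate entries.

    The 'prompt'/'completion' field names are an assumption; match them to
    whatever schema your fine-tuning framework expects.
    """
    seen = set()
    lines = []
    for pair in pairs:
        q = pair.get("question", "").strip()
        a = pair.get("answer", "").strip()
        if not q or not a or (q, a) in seen:
            continue  # basic quality filter: no blanks, no exact duplicates
        seen.add((q, a))
        record = {
            "prompt": f"{instruction}\n\nQuestion: {q}\nAnswer:",
            "completion": " " + a,
        }
        lines.append(json.dumps(record, ensure_ascii=False))
    return "\n".join(lines) + "\n" if lines else ""


if __name__ == "__main__":
    print(build_jsonl(RAW_PAIRS, INSTRUCTION), end="")
```

Each output line is one training example; the fine-tuning job then continues training the pre-trained model on these lines.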

Variants of Fine-Tuning:

- Full Fine-Tuning: This is the conventional method, in which the entire model is trained on the new data and all of its parameters are adjusted to suit the target task. While effective, it can be computationally expensive and time-consuming for models with billions of parameters, as many LLMs have, because gradients and optimizer state typically must be kept for every parameter.

- Parameter-Efficient Fine-Tuning (PEFT): A family of modern techniques that freeze most of the pre-trained weights and train only a small number of parameters, for example low-rank adapter matrices (as in LoRA) or small added modules. PEFT can often approach the performance of full fine-tuning at a fraction of the compute and memory cost, which makes it particularly valuable when working with large models. We’ll delve deeper into PEFT and its nuances in a subsequent article in this series.
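To make PEFT more concrete, here is a sketch of one popular PEFT method, LoRA (Low-Rank Adaptation), chosen purely for illustration; it is not the only PEFT approach. LoRA keeps a pre-trained weight matrix W (shape d×k) frozen and learns a low-rank update B·A, with B of shape d×r and A of shape r×k, so each adapted matrix trains r·(d + k) parameters instead of d·k. The pure-Python matrices and the 4096×4096, rank-8 sizes are assumptions chosen for readability, not settings from any particular model.

```python
def matvec(M, x):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def lora_forward(W, A, B, x, alpha, r):
    """h = W x + (alpha / r) * B (A x); W stays frozen, only A and B are trained."""
    base = matvec(W, x)                 # frozen pre-trained path, length d
    low_rank = matvec(B, matvec(A, x))  # A x has length r, B (A x) has length d
    scale = alpha / r
    return [h + scale * u for h, u in zip(base, low_rank)]


def trainable_params(d, k, r):
    """Parameters updated for one d x k weight matrix: (full fine-tuning, LoRA)."""
    return d * k, r * (d + k)


if __name__ == "__main__":
    full, lora = trainable_params(4096, 4096, 8)
    print(f"full: {full:,}  lora: {lora:,}  ratio: {lora / full:.2%}")
    # full: 16,777,216  lora: 65,536  ratio: 0.39%

    # Tiny example: B starts at zero (as in LoRA), so the adapted layer
    # initially behaves exactly like the frozen layer W x.
    W = [[1.0, 2.0], [3.0, 4.0]]  # d=2, k=2
    A = [[0.5, -0.5]]             # r=1, k=2
    B = [[0.0], [0.0]]            # d=2, r=1
    print(lora_forward(W, A, B, [1.0, 1.0], alpha=2, r=1))  # [3.0, 7.0]
```

Under these assumed sizes, LoRA trains well under 1% of the parameters of the matrix it adapts, which is where the compute and memory savings come from.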

Why Choose Fine-Tuning?

Fine-tuning capitalizes on the knowledge a model already has from pre-training on vast datasets and tailors it to specific tasks. Because the model builds on an established foundation rather than starting from scratch, far less training is needed. Furthermore, with variants like PEFT, businesses can achieve robust performance without incurring exorbitant computational costs.

- Tailored Solutions: Every business is unique, and fine-tuning allows AI solutions to mirror this uniqueness.

- Economical: Building an LLM from scratch is resource-intensive. Fine-tuning is a cost-effective alternative.

- Efficiency: Time is of the essence in the rapidly evolving world of AI. Fine-tuning, being faster than full-scale training, offers a timely solution.

- Leveraging Established Knowledge: Starting with a pre-trained model means you’re not starting from zero. You’re building on top of existing expertise.

Advantages of fine-tuning:

- Quick Adaptation: Fine-tuning typically takes a small fraction of the time needed to train a model from scratch, so specialized applications can be deployed sooner.

- Cost-Efficiency: It’s economically wiser to adapt a pre-existing model than to build one from the ground up.

- Versatility: From customer service bots to predictive analytics tools, one foundational model can spawn myriad specialized applications.

Challenges of fine-tuning:

- Quality Matters: The output is only as good as the input. Biased or flawed fine-tuning data will yield imperfect results.

- Balancing Act: There’s a risk of making the model overly specialized. Training heavily on narrow data can erode some of its general knowledge, a phenomenon known as catastrophic forgetting.

With an understanding of fine-tuning, businesses can navigate the AI landscape more strategically, choosing the right approach for their specific needs and challenges.

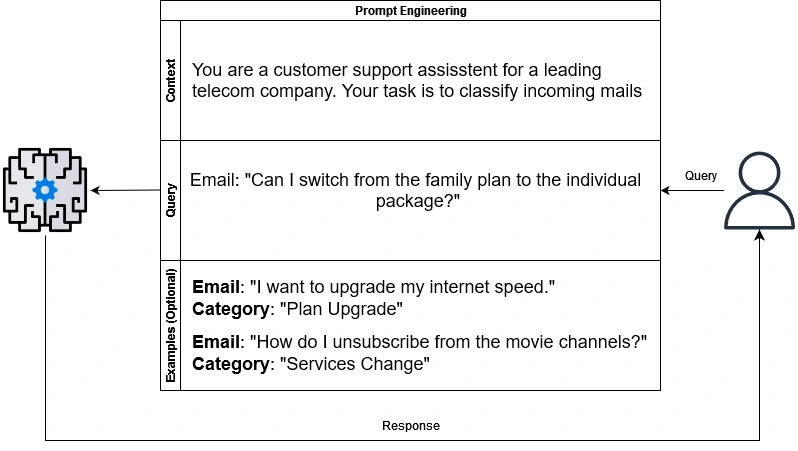

Prompt Engineering: Crafting Questions for Smarter AI

As AI’s role in our world grows, it’s not just about having a smart model, but also about knowing how to ask it the right questions. That’s where prompt engineering comes into play.

Introduction to Prompt Engineering:

While it might sound sophisticated, prompt engineering is essentially the practice of crafting inputs, or ‘prompts’, to elicit desired outputs from a model. Think of it as asking a question in different ways to get the most accurate answer. For instance, instead of asking an LLM “What is the capital of France?”, a prompt-engineered question might be, “Which city serves as the official seat of the government in France?”

The Power of Prompts:

By restructuring how we ask, we can draw out more precise or nuanced answers from the model. This doesn’t necessarily involve retraining the model. Instead, it’s about guiding the existing model to better conclusions by framing the question more effectively. In many cases, the right prompt can be the difference between a generic answer and a nuanced, detailed one.
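One practical way to frame a question more effectively is to wrap it in a reusable template that states a role, any relevant context, and the expected output format. The sketch below is plain string formatting; the section names and wording are hypothetical choices, and the resulting text would be sent to whichever model API you use.

```python
def build_prompt(question, role="a helpful assistant", context="",
                 output_format="A short, direct answer."):
    """Assemble a structured prompt from its parts; an empty context is omitted."""
    sections = [f"You are {role}."]
    if context:
        sections.append(f"Context:\n{context}")
    sections.append(f"Question:\n{question}")
    sections.append(f"Respond in this format:\n{output_format}")
    return "\n\n".join(sections)


if __name__ == "__main__":
    print(build_prompt(
        "Which city serves as the official seat of the government in France?",
        role="a geography tutor",
        output_format="The city name, followed by one sentence of explanation.",
    ))
```

Keeping the template in code makes it easy to vary one element at a time (role, context, or format) and compare the model’s answers.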

Challenges and Limitations:

However, prompt engineering is not without its challenges. Finding the right prompt often involves trial and error, and a prompt that works well for one model may be far less effective for another. Additionally, a prompt tuned against only a handful of test inputs can become brittle: it handles those cases well but generalizes poorly to new ones. This is a form of overfitting, although it is the prompt, not the model, that is overfitted, since the model’s weights never change.

Why Embrace Prompt Engineering?

Despite its challenges, prompt engineering holds a special place in the world of AI adaptation. It’s a cost-effective way to extract more value from models without the need for extensive retraining. In scenarios where computational resources or data might be limited, prompt engineering offers a viable alternative to tap into the full potential of an LLM.

Advanced Strategies in Prompt Engineering

1. Zero-Shot, Few-Shot, and Many-Shot Learning:

- Zero-Shot Learning: The model is given only an instruction or question, with no worked examples in the prompt, and must rely entirely on what it learned during pre-training. Suppose a model has been trained on a plethora of general knowledge but never specifically on identifying rare bird species. When presented with a description of a rare bird, it might still identify it correctly by correlating the description with what it already knows.

- Few-Shot Learning: Here, the prompt itself includes a few examples of the task; the model’s weights are not changed. By seeing how similar inputs were handled, the model gets a ‘hint’ and is better positioned to tackle a new, related input. Imagine you want your model to suggest dishes. You provide it with a handful of examples like “ingredient: apple, dish: pie” and then prompt it with “ingredient: chicken”. The model might then produce a chicken-based dish, having grasped the task from the few examples.

- Many-Shot Learning: As the name suggests, this involves providing the LLM with numerous examples, limited by how many fit in its context window. If you’re aiming to get summaries of technical research papers, you might feed the model dozens of paper excerpts, each followed by its concise summary. When given a new paper, the model will be better equipped to generate a summary that matches the style and brevity of the examples.
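The three variants differ only in how many worked examples the prompt contains, which a small helper can make explicit. This sketch reuses the ingredient/dish format from the few-shot example; the function name, labels, and task instruction are hypothetical, and in practice the number of examples is capped by the model’s context window.

```python
def build_shot_prompt(task, examples, query, input_label="ingredient", output_label="dish"):
    """Zero-shot when `examples` is empty, few-shot with a handful, many-shot with dozens."""
    lines = [task, ""]
    for inp, out in examples:
        lines.append(f"{input_label}: {inp}, {output_label}: {out}")
    # The final line leaves the output blank for the model to complete.
    lines.append(f"{input_label}: {query}, {output_label}:")
    return "\n".join(lines)


if __name__ == "__main__":
    task = "Suggest a dish that features the given ingredient."
    print(build_shot_prompt(task, [], "chicken"))  # zero-shot
    print()
    print(build_shot_prompt(task, [("apple", "pie"), ("tomato", "soup")], "chicken"))  # few-shot
```

Moving from zero-shot to many-shot only changes the `examples` list; the model itself is untouched, which is why these techniques are cheap to try.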

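2. Chain-of-Thought Prompting and Prompt Chaining:

Sometimes a single, direct question isn’t enough. Chain-of-Thought (CoT) prompting asks the model to work through intermediate reasoning steps before giving its final answer, either by adding an instruction such as “Let’s think step by step” or by including few-shot examples that show worked reasoning. This often improves results on multi-step problems such as arithmetic or logic puzzles. A related technique, prompt chaining, spreads the work across a series of prompts, where each subsequent query builds on the previous answer. This can be likened to a conversation where information is progressively layered, enabling the extraction of intricate details or a more in-depth exploration of a topic. Consider seeking a detailed explanation of a complex topic like quantum entanglement. The initial prompt could be a basic query, “What is quantum entanglement?” Based on the model’s response, a follow-up might delve deeper, asking, “How does it correlate with quantum superposition?” This sequential prompting can lead to a richer, multi-faceted understanding.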
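The sequential questioning in the quantum-entanglement example, where each prompt carries the earlier exchange forward, is usually called prompt chaining; Chain-of-Thought prompting, by contrast, asks for step-by-step reasoning within a single prompt. The sketch below shows both. The `ask` argument is a hypothetical stand-in for whatever function sends a prompt to your model and returns its text, and the Q:/A: transcript format is an assumed convention.

```python
from typing import Callable, List, Tuple


def run_prompt_chain(ask: Callable[[str], str], questions: List[str]) -> List[Tuple[str, str]]:
    """Ask questions in order, feeding earlier Q&A turns back in as context."""
    history: List[Tuple[str, str]] = []
    for question in questions:
        transcript = "\n".join(f"Q: {q}\nA: {a}" for q, a in history)
        prompt = f"{transcript}\nQ: {question}\nA:" if transcript else f"Q: {question}\nA:"
        answer = ask(prompt)
        history.append((question, answer))
    return history


def chain_of_thought(question: str) -> str:
    """Single prompt asking the model to show intermediate reasoning before answering."""
    return f"{question}\nLet's think step by step, then give the final answer on its own line."


if __name__ == "__main__":
    def fake_model(prompt: str) -> str:
        # Hypothetical stand-in for a real model call.
        return f"(model answer to a prompt of {len(prompt)} characters)"

    chain = run_prompt_chain(fake_model, [
        "What is quantum entanglement?",
        "How does it correlate with quantum superposition?",
    ])
    for q, a in chain:
        print(q, "->", a)
    print(chain_of_thought("A train travels 120 km in 1.5 hours. What is its average speed?"))
```

Because each chained prompt includes the full history, prompts grow with every turn; long chains may need earlier answers summarized to stay within the context window.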

Harnessing Advanced Prompting Techniques:

Incorporating these advanced strategies can markedly improve results. They offer a dynamic way to harness the capabilities of LLMs and can yield more thorough, nuanced responses. They are especially valuable in scenarios where fine-tuning isn’t feasible or where a more exploratory, interactive exchange with the model is desired.

Advantages of Prompt Engineering:

- Flexibility: Prompting offers a dynamic approach to obtaining desired outputs from models without the need for retraining. This is particularly useful when the user requires varied responses based on different scenarios.

- Rapid Adaptation: With few-shot or many-shot learning, models can adapt to new tasks quickly, making it easier to modify behavior without a comprehensive fine-tuning process.

- Resource Efficiency: Compared to retraining models, prompt engineering is often more computationally efficient. It allows for quick tweaks and changes without expending extensive resources.

- Stepwise Reasoning: Chain-of-Thought prompting and prompt chaining break complex questions into simpler steps, either within a single response or across sequential queries, helping the model arrive at a more nuanced answer.

Challenges of Prompt Engineering:

- Consistency: Outputs can be hard to keep consistent. Slight changes in phrasing can yield noticeably different answers, and when sampling is enabled, even an identical prompt can produce different responses from one run to the next.

- Scalability: While prompting works well for individual tasks or queries, scaling it up for large datasets or comprehensive tasks can be a challenge, especially when a consistent output is required across all prompts.

- Expertise Required: Crafting effective prompts, especially for advanced strategies like Chain-of-Thought prompting, demands a solid understanding of both the model and the task at hand.

- Over-reliance: Solely relying on prompting might lead to missing out on the potential benefits of other techniques like fine-tuning, especially if the task requires extensive modifications in model behavior.

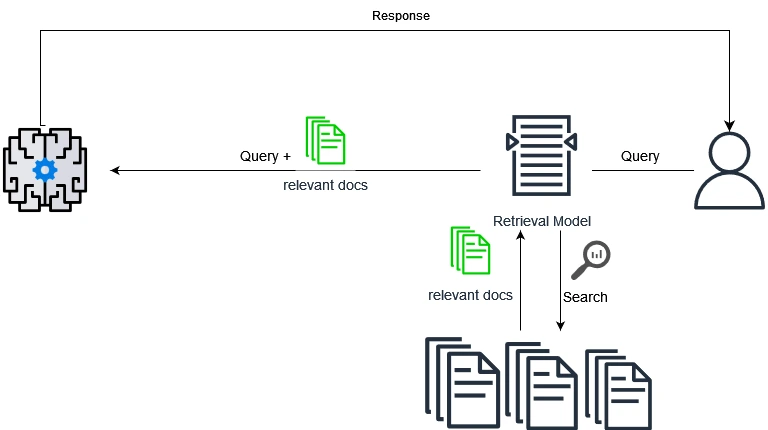

Retrieval-Augmented Generation (RAG)

Introduction to RAG:

Retrieval-Augmented Generation (RAG) combines the strengths of retrieval-based and generative methods to answer questions. At its core, RAG draws on external knowledge sources, often large-scale databases or document collections, to help generate a response. When a question comes in, the system first retrieves relevant passages from this knowledge source, and the model then formulates a coherent, contextually relevant answer using those passages.

How RAG Works:

- Retrieval Phase: When a query is presented, RAG first identifies relevant passages or documents from a large-scale corpus that could provide context or insights to answer the query.

- Generation Phase: Using the retrieved documents as context, the model then generates a coherent answer. This grounds the response not only in the model’s pre-trained knowledge but also in the current, relevant data held in the external sources.
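The two phases can be sketched end to end in a few lines. This is a deliberately simplified illustration: it scores passages with bag-of-words cosine similarity, whereas real RAG systems usually rely on learned embeddings and a vector database, and the three example documents, class name, and prompt wording are assumptions. The final prompt would be passed to an LLM for the generation phase, which is not shown.

```python
import math
import re
from collections import Counter

# Illustrative knowledge base; a real system would index far more material.
DOCS = [
    "Auroras such as the Northern Lights occur when charged particles from the solar wind "
    "collide with gases in Earth's upper atmosphere.",
    "Photosynthesis converts light energy into chemical energy in plants.",
    "The Eiffel Tower is located in Paris.",
]


def tokenize(text):
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine(a, b):
    """Cosine similarity between two term-count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class TinyRetriever:
    """Bag-of-words retriever standing in for an embedding model plus vector index."""

    def __init__(self):
        self.docs = []

    def add(self, doc):
        # Adding knowledge only updates the index; no model retraining is involved.
        self.docs.append((doc, Counter(tokenize(doc))))

    def search(self, query, k=2):
        """Retrieval phase: return the k passages most similar to the query."""
        q = Counter(tokenize(query))
        ranked = sorted(self.docs, key=lambda d: cosine(q, d[1]), reverse=True)
        return [doc for doc, _ in ranked[:k]]


def build_rag_prompt(query, passages):
    """Generation-phase input: retrieved passages as numbered context, then the question."""
    context = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, 1))
    return (
        "Answer the question using only the numbered passages below. "
        "If they do not contain the answer, say so.\n\n"
        f"{context}\n\nQuestion: {query}\nAnswer:"
    )


if __name__ == "__main__":
    retriever = TinyRetriever()
    for doc in DOCS:
        retriever.add(doc)
    question = "What are the primary causes of the Northern Lights?"
    print(build_rag_prompt(question, retriever.search(question, k=1)))
```

Because knowledge lives in the index rather than in the model weights, calling `add` with new documents changes what the system can retrieve immediately, without retraining the model.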

Examples:

- Fact-Based Questions: If someone asks, “What are the primary causes of the Northern Lights?”, RAG might retrieve scientific articles or trusted databases on atmospheric phenomena to generate a detailed and accurate response.

- Event Updates: For questions like, “What were the outcomes of the recent climate change conference?”, RAG could pull information from recent news articles or official conference summaries to provide a current answer.

Advantages of RAG:

- Data-driven Responses: Answers draw not only on the model’s pre-trained knowledge but also on up-to-date, contextually relevant information, as current as the knowledge base being searched.

- Balanced Approach: By combining retrieval and generation, RAG strikes a balance between accuracy (by retrieving factual data) and fluency (by generating coherent responses).

- Easy Updates: Because knowledge lives in an external database, the system can be continually updated with new information, keeping it current without the need for constant retraining.

Challenges of RAG:

- Dependency on External Data: The accuracy and relevance of the model’s responses heavily depend on the quality of the external database.

- Complexity: Implementing RAG requires managing both retrieval and generation processes, which can increase the complexity of the system.

- Latency: The two-step process, especially when accessing large databases, might introduce latency in generating responses, which could be problematic for real-time applications.

Choosing the Right Technique for Your Needs

While we’ve explored various techniques to adapt open-source LLMs, the question that often arises is: “Which technique is right for my project?” The answer largely depends on your specific requirements:

- Fine-Tuning: Best suited for projects that have a task-specific dataset available and need performance tailored to that task. This method is especially beneficial if the out-of-the-box performance of a pre-trained LLM isn’t satisfactory.

- Prompt Engineering: A good choice for tasks that require a more general approach, especially when the available data for training is scarce. If you’re looking to get results quickly without the overhead of model training, prompting can be a powerful tool.

- Retrieval-Augmented Generation (RAG): Optimal for projects that need a combination of knowledge retrieval and generation. If your application involves sourcing information from large datasets or documents and then generating a cohesive response, RAG is your go-to technique.

However, the above is just a basic guideline. The specifics of your project, data availability, computational resources, and desired outcomes play a significant role in the decision-making process. Stay tuned for our upcoming articles, where we’ll delve deeper into the intricacies of each method, helping you make even more informed choices.

Wrapping Up

As the AI landscape constantly evolves, the techniques we employ to leverage the power of Large Language Models must evolve with it. Fine-tuning, prompt engineering, and RAG each offer unique advantages that cater to specific challenges and goals. Whether it’s the precise calibration of a model to specific tasks through fine-tuning, the versatility and adaptability offered by prompt engineering, or the dynamism and real-world applicability achieved through RAG, these techniques signify the next frontier in LLM adaptation.

Moreover, as open-source LLMs gain traction, understanding these techniques becomes vital for businesses and developers aiming to ride the wave of AI democratization. It’s not just about accessing powerful models; it’s about tailoring them effectively to real-world applications.

In the coming articles, we will delve deeper into the nuances of these techniques, their efficiencies, and their sustainability impacts. The age of accessible AI is here, and the tools to harness its potential are right at our fingertips. Join us in this journey as we explore, adapt, and innovate.