MIT’s Project NANDA confirms in its “The GenAI Divide: State of AI in Business 2025” report what enterprise teams have felt for months: adoption is high, transformation is rare. The problem isn’t model quality; it’s a structural learning gap. Knowledge gets trapped in tickets, tribal expertise, and fractured state, and models trained on that chaos are brittle. That diagnosis maps exactly to our Operational Knowledge Decay theory. The remedy is architectural: extract, structure, and operationalize that buried history. We call it the Knowledge Refinery.

What MIT NANDA actually found

Project NANDA reviewed hundreds of implementations and interviewed enterprise leaders. The headline: most custom enterprise GenAI pilots don’t deliver measurable P&L impact. The report isolates a clear cause. It is not governance, compute, or talent, but systems that don’t learn: they fail to retain context, they can’t improve from feedback, and they don’t integrate into real workflows.

Two facts matter here:

- Consumer tools (ChatGPT, Copilot) are widely used by individuals because they’re flexible and immediate, but they don’t translate to mission-critical, long-running workflows without memory and customization.

- The pilot-to-production drop-off for task-specific systems is dramatic. Project NANDA shows very few pilots scale because the systems are built on fractured, ephemeral context.

In short: there’s demand and capability, but the foundation is broken. That’s Operational Knowledge Decay.

“The core barrier to scaling is not infrastructure, regulation, or talent. It is learning.”

MIT Project NANDA


Why Operational Knowledge Decay is the real failure mode

Operational Knowledge Decay describes how valuable institutional knowledge — problem histories, resolutions, tacit fixes — becomes effectively invisible inside the tools teams use every day: ticketing systems, maintenance logs, SOP notes. When you wrap a model around that graveyard, the bot simply amplifies the absence of truth.

Three predictable failure modes:

-

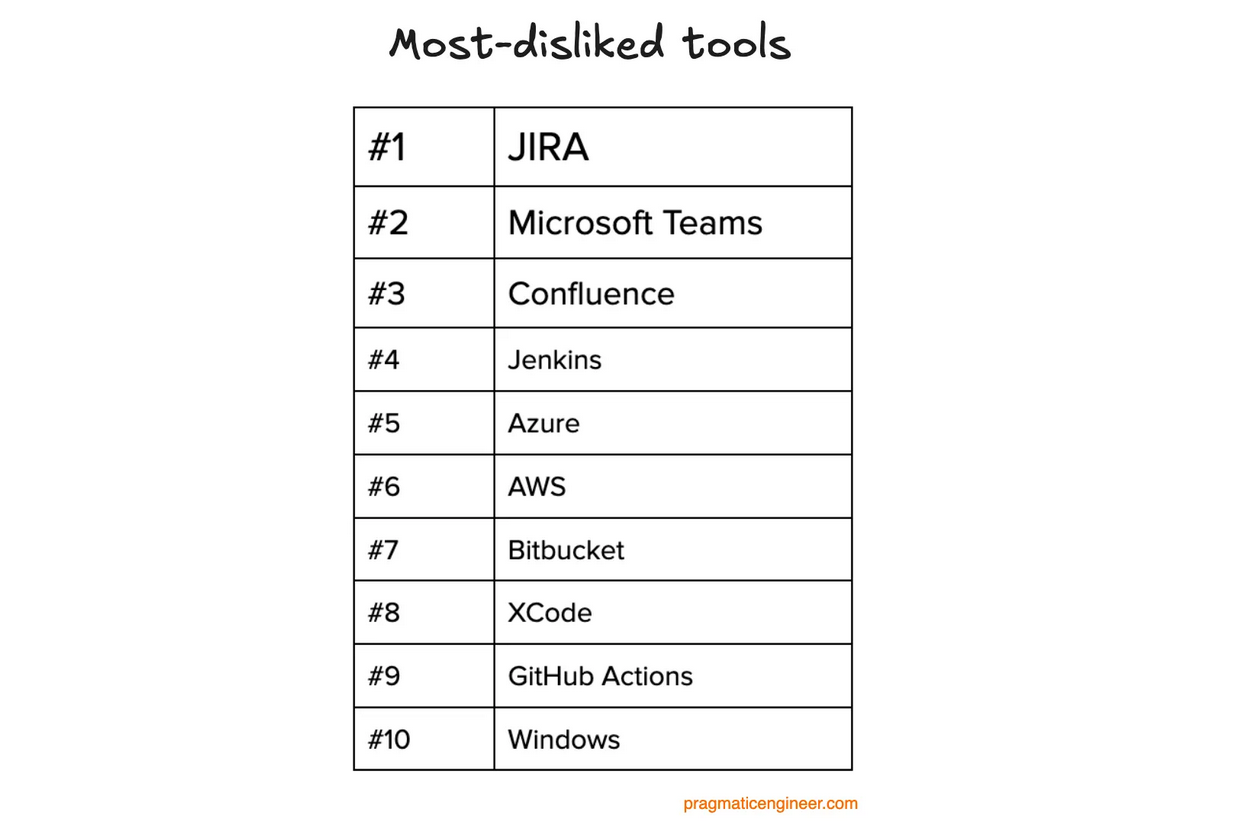

Expert bottlenecks: senior engineers keep solutions in their heads; no persistent encoding.

-

Institutional amnesia: repeated re-solving of identical problems because prior fixes are buried.

-

Brittle automation: AI produces confident but incorrect actions because required context never made it into datasets.

These are the exact frictions Project NANDA flags as the learning gap. The report doesn’t say “models are bad.” It says the data and process substrate underneath them is broken, and that is a solvable engineering problem.

How the Knowledge Refinery (CAA) closes the gap — practical mapping

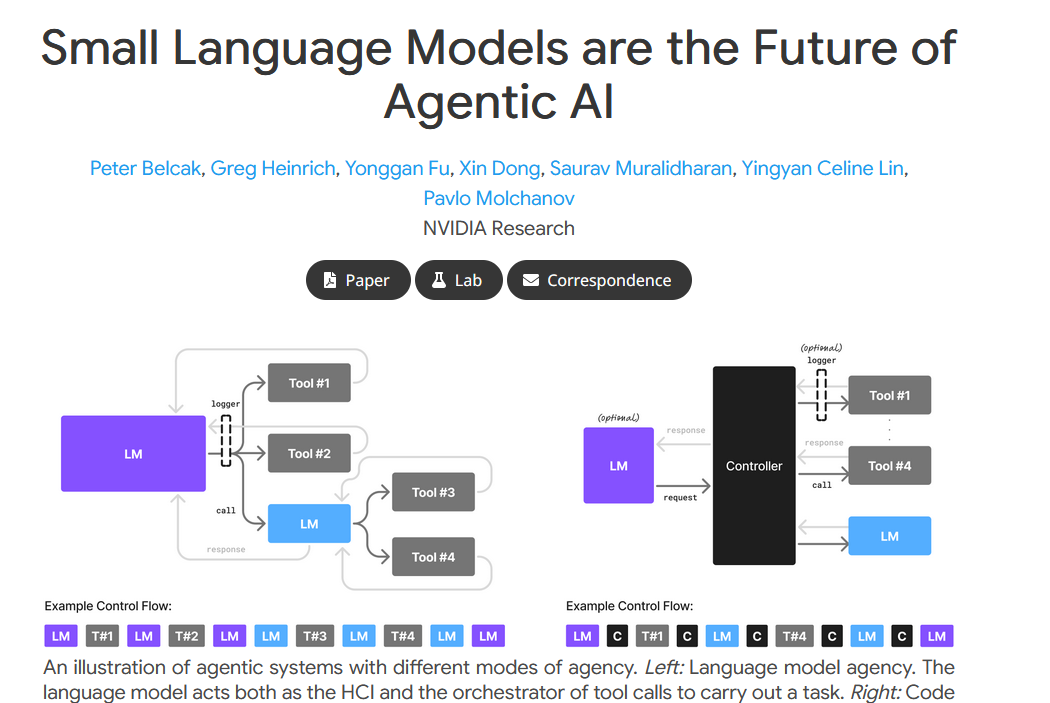

You don’t fix this with a bigger model or a prettier UI. You fix it with architecture that produces persistent context and measurable learning. Our Cognitive Agentic Architecture (CAA) implements that in four practical ways:

- Structured Context & State Layer: convert noisy records into canonical state objects (explicit state beats free-text every time). That state is versioned and queryable.

- Persistent Memory + Feedback Loops: ingest outcomes and corrections so agents learn from each interaction rather than restarting every session.

- Deterministic Control + Observability: explicit control flows and full traceability so ops teams can audit decisions and identify gaps.

- Sovereign Stack & Data Boundaries: enterprise-grade hosting and connectors to keep data private and auditable.
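To make the first two layers concrete, here is a minimal Python sketch of a versioned, queryable state object with a feedback write-back. It is an illustration under stated assumptions, not the CAA implementation: `IncidentState`, `StateStore`, every field name, and the in-memory storage are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Optional


@dataclass(frozen=True)
class IncidentState:
    """Hypothetical canonical state object distilled from a free-text ticket."""
    incident_id: str
    asset: str
    symptom: str
    resolution: Optional[str] = None
    resolved: bool = False
    version: int = 1


class StateStore:
    """In-memory stand-in (an assumption) for a versioned, queryable state layer."""

    def __init__(self) -> None:
        # incident_id -> every version ever written, oldest first
        self._history: Dict[str, List[IncidentState]] = {}

    def put(self, state: IncidentState) -> IncidentState:
        """Append a new version; earlier versions are never overwritten."""
        versions = self._history.setdefault(state.incident_id, [])
        stored = replace(state, version=len(versions) + 1)
        versions.append(stored)
        return stored

    def latest(self, incident_id: str) -> IncidentState:
        return self._history[incident_id][-1]

    def history(self, incident_id: str) -> List[IncidentState]:
        """Full version trail for one incident, useful for audits."""
        return list(self._history[incident_id])

    def record_feedback(self, incident_id: str, resolution: str, worked: bool) -> IncidentState:
        """Feedback loop: write the observed outcome back as a new version."""
        current = self.latest(incident_id)
        return self.put(replace(current, resolution=resolution, resolved=worked))

    def known_fixes(self, asset: str, symptom: str) -> List[str]:
        """Query resolutions that worked before for the same asset and symptom."""
        fixes = []
        for versions in self._history.values():
            state = versions[-1]
            if (state.resolved and state.resolution
                    and state.asset == asset and state.symptom == symptom):
                fixes.append(state.resolution)
        return fixes


# Illustrative usage with made-up data
store = StateStore()
store.put(IncidentState("INC-1", "pump-7", "overheating"))
store.record_feedback("INC-1", "replace seal", worked=True)
print(store.known_fixes("pump-7", "overheating"))
```

The design choice worth noting is that feedback never mutates a record in place. Each correction becomes a new version, so the next session can query what worked and an ops team can still trace how the state got there.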

Map this to MIT’s requirements: learning capability (memory + feedback), workflow fit (deep connectors + state), and measurable outcomes (instrumentation + KPIs). In our pilots, adding explicit state and feedback reduced repeat incidents by up to 30% and shortened cycle time. These are anonymized results, and they indicate that memory matters.
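A KPI such as repeat incidents only counts as instrumentation once it is defined precisely enough to compute. The sketch below shows one possible definition, assuming an incident is a "repeat" when its (asset, symptom) pair has already appeared earlier in the same log. The function names and the sample data are hypothetical and are not taken from our pilots.

```python
from typing import Iterable, Tuple


def repeat_incident_rate(incidents: Iterable[Tuple[str, str]]) -> float:
    """Share of incidents whose (asset, symptom) pair was already seen earlier."""
    seen = set()
    total = 0
    repeats = 0
    for key in incidents:
        total += 1
        if key in seen:
            repeats += 1
        seen.add(key)
    return repeats / total if total else 0.0


def relative_reduction(baseline: float, pilot: float) -> float:
    """Relative drop of the pilot rate versus the baseline rate."""
    return (baseline - pilot) / baseline if baseline else 0.0


# Made-up logs for illustration only
baseline_log = [("pump-7", "overheating"), ("pump-7", "overheating"),
                ("valve-2", "leak"), ("valve-2", "leak")]
pilot_log = [("pump-7", "overheating"), ("pump-7", "overheating"),
             ("valve-2", "leak"), ("fan-1", "vibration")]

baseline_rate = repeat_incident_rate(baseline_log)
pilot_rate = repeat_incident_rate(pilot_log)
print(baseline_rate, pilot_rate, relative_reduction(baseline_rate, pilot_rate))
```

Agreeing on a definition like this before the pilot starts is what lets buyer and vendor compare the same number at the end.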

- Context matters more than model size. Consumer LLMs prove utility for ad-hoc work; production requires persistent memory, feedback loops, and integration.

- The learning gap is our map. MIT shows the failure modes (brittle workflows, no context retention, and poor UX) that lock most pilots into POC purgatory.

- This is a selective opportunity. Like a gold rush, the raw resource is abundant but extractable value requires process, tooling, and discipline.

What buyers and vendors should do now

If you buy: insist on pilots benchmarked by operational KPIs, not demo slides. Require a clear instrumentation plan and a path for the vendor to learn from your data. Prefer co-development pilots with tight accountability.

If you build: start narrow. Pick a high-volume, well-instrumented process, and ship memory + feedback first. Don’t optimize UX until the model consistently improves from real interactions.

If you invest: favor teams who deliver immediate operational ROI and have channel/referral routes into procurement. The switching costs for learning-capable systems will compound quickly.

Our Offer

MIT NANDA’s report diagnosed the disease. Our ‘Friction Audit Lite’ is the first dose of the cure.

It is a 2-week, data-driven diagnostic that will give you a ranked shortlist of your highest-ROI automation opportunities and a concrete blueprint for your first successful ‘Second Wave’ pilot.

Stop theorizing. Start building on a foundation of evidence.

- Launch Your Friction Audit Lite (€8,000) – 2-week delivery · refundable credit toward Project Foundation if you convert within 30 days.


BSFZ-certified R&D • MIT Project NANDA validated diagnosis • anonymized pilot outcomes available on request.