The Pragmatic Engineer survey, its largest developer survey with ≈3,000 engineers, doesn’t just list tool likes and dislikes. It exposes the real failure of first-wave AI tooling: fragmented, decaying institutional knowledge. JIRA isn’t the root problem; it’s the symptom of Operational Knowledge Decay, the collapsing infrastructure that turns every workflow into friction.

The Real Failure Isn’t AI. It’s Operational Knowledge Decay.

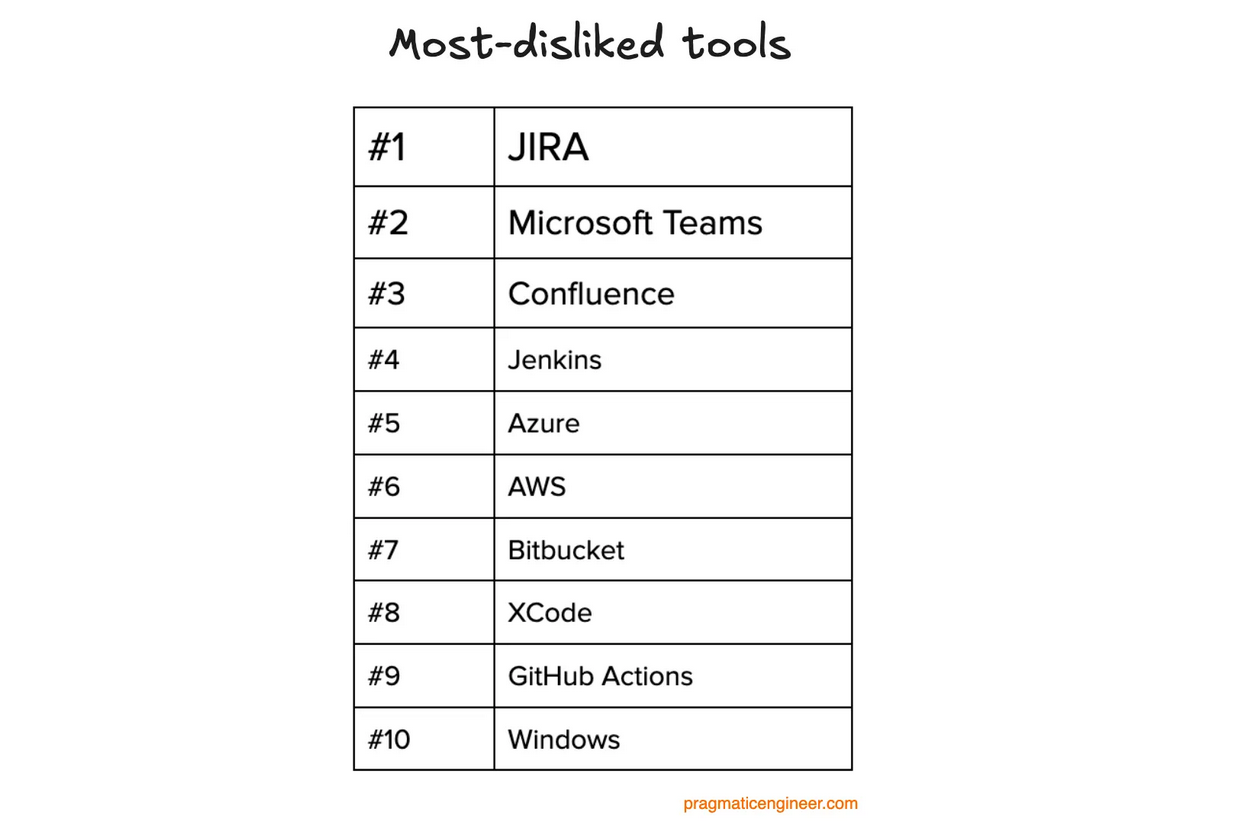

The survey’s most predictable and visceral finding is that JIRA is, by far, the most-disliked tool in software development.

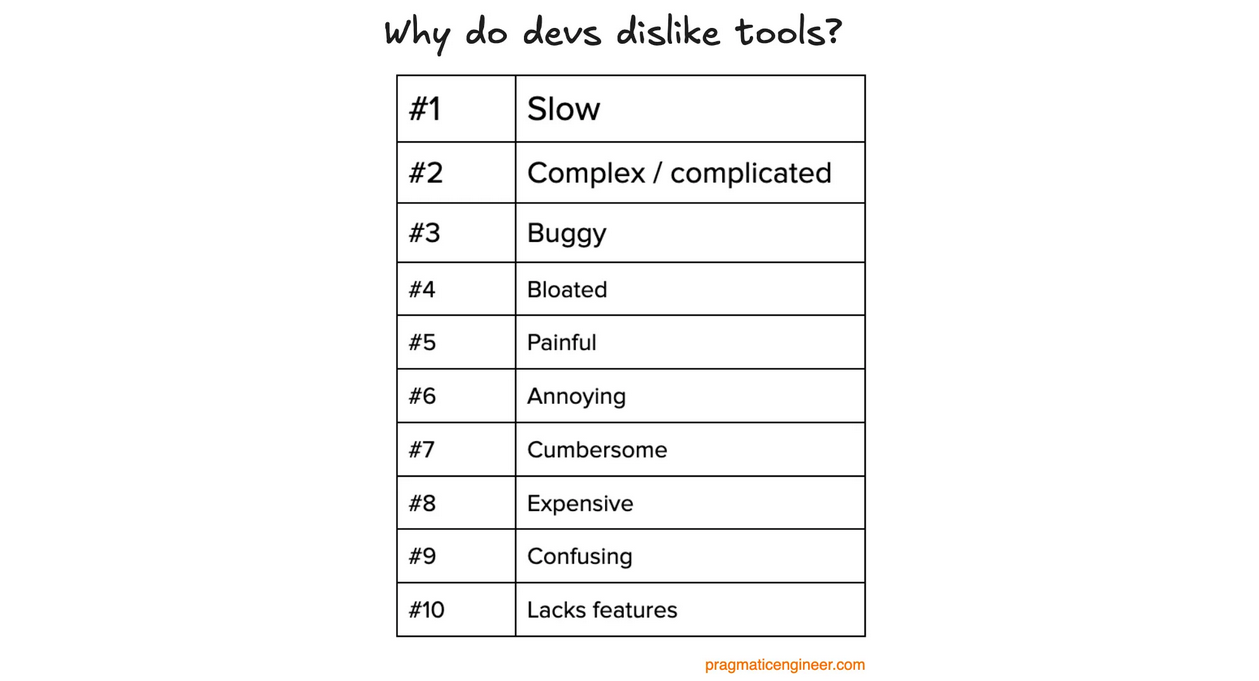

The common mistake is to blame the tool. But the survey’s own data on why developers dislike these tools (“Slowness,” “Complicated,” “Hard to find information”) reveals a deeper truth.

This isn’t an interface problem. It is a symptom of a systemic disease we call Operational Knowledge Decay.

Let’s connect the dots between the symptom and the disease:

- Why does JIRA feel “slow”? Not because of the hardware. It’s slow because every ticket forces a human to manually hunt for context in a vast “data graveyard” of past issues. The tool feels slow because the knowledge retrieval process is fundamentally broken.

- Why is Confluence “hard to find information in”? Because your institutional knowledge is fragmenting, rotting, and disappearing under years of outdated, unstructured pages. The tool isn’t the problem; the lack of a living, structured knowledge asset is the problem.

- Why do these systems feel “buggy”? Because the workflow itself is broken. When a ticket gets passed between six people (“ticket ping-pong”) because the answer is trapped in an expert’s head or a five-year-old ticket, the system feels broken. The friction is not in the code; it is in the failed knowledge transfer.

The hatred for these tools is the market screaming that a piece of its critical infrastructure is failing. It’s the pain of managing 21st-century complexity with 20th-century systems of record.

This is not a productivity hiccup. It is a failure of your most critical infrastructure: your knowledge.

The First Wave Broke on the Rocks of Reliability

Generative AI arrived promising magic—autocomplete, code generation, instant answers. Tools like GitHub Copilot are widely adopted, but the survey makes clear why adoption hasn’t translated into trust: engineers love the idea of acceleration and hate the reality of interruptions, incorrect suggestions, and brittle hallucinations. The market has moved past awe; it’s now demanding predictability, verifiability, and control. AI that “mostly works” is now a liability in production contexts.

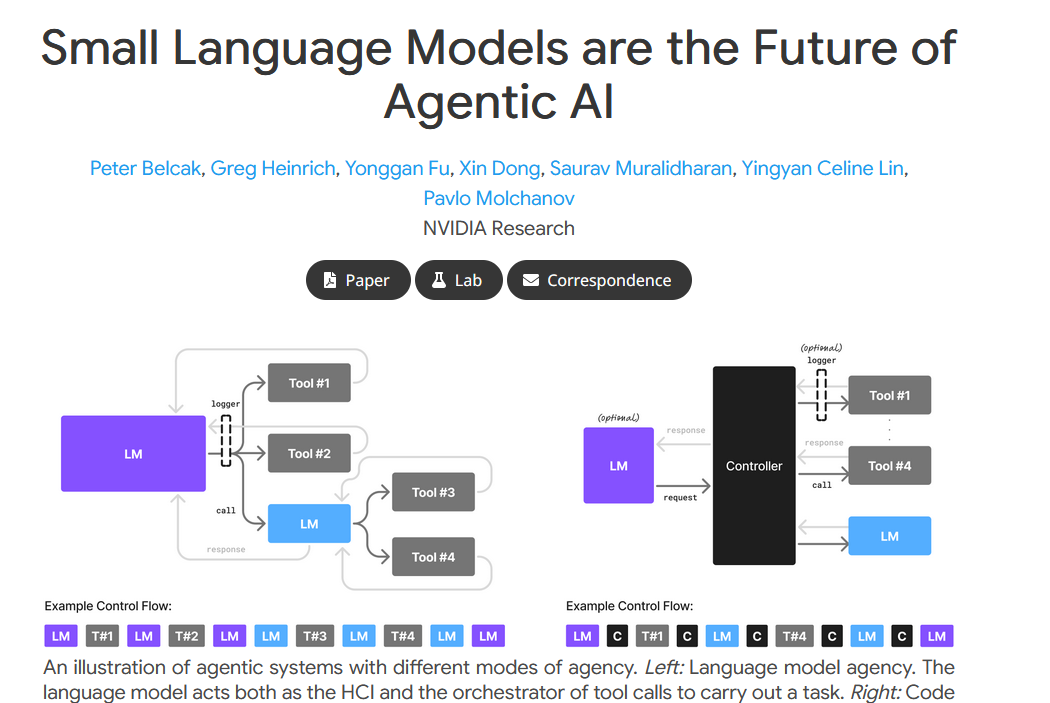

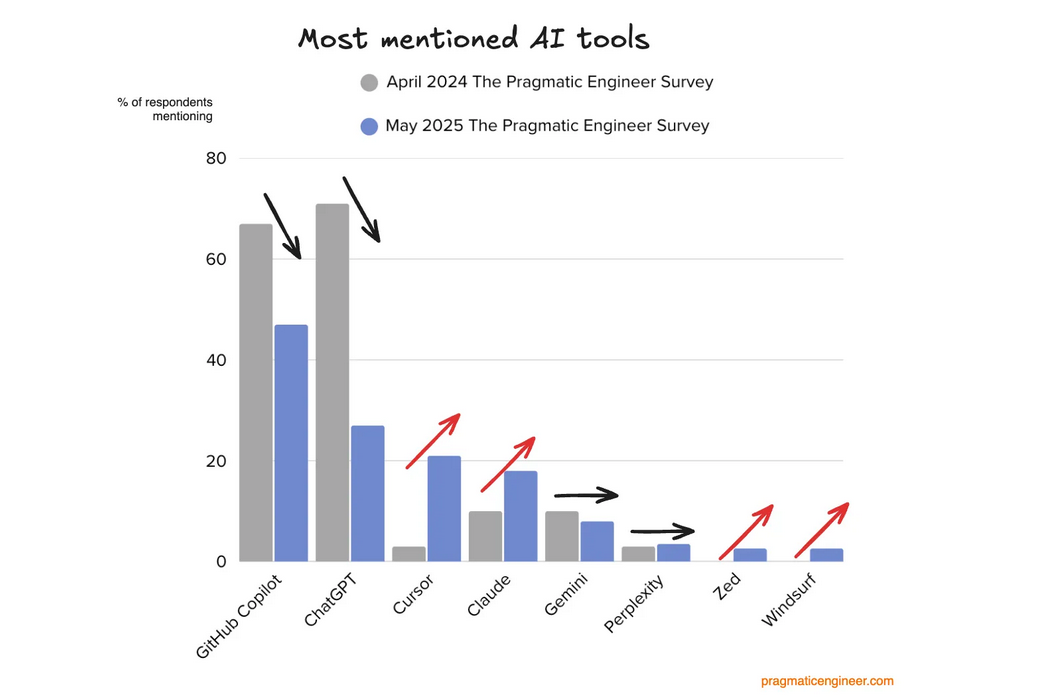

The data shows a clear shift. While initial adoption of 'First Wave' AI tools was explosive, we are now seeing the beginning of a market correction. Engineers are moving past the magic and starting to demand reliability and control. The market is searching for a 'Second Wave' of enterprise-ready solutions.

The chart shows the First Wave plateauing while specialists that prioritize reliability and integration are accelerating.

- Engineers are moving from ChatGPT to Claude because they are seeking more specialized and nuanced models for complex tasks.

- They are moving from a generic browser interface to Cursor, an AI-native code editor, because they need tools that are deeply integrated into their actual workflow.

- They are moving from generic search to Perplexity, a ‘knowledge engine,’ because they need verifiable, citation-backed answers, not just plausible-sounding text.

This is not a change in preference. It’s a flight to quality.

The market is maturing, in real time, from a demand for ‘AI Magic’ to a demand for AI Reliability, Integration, and Verifiability. These are the non-negotiable pillars of our Cognitive Agentic Architecture and the core principles of the Second Wave.

What the Survey Validates: Three Second-Wave Principles

- Reliability Over Magic. The industry doesn’t need flashier generation; it needs systems that deliver consistent, correct signals without manual babysitting.

- Knowledge as Infrastructure. Documentation and context aren’t optional extras. They are the substrate that determines whether AI tooling amplifies leverage or amplifies entropy.

- Integration, Not Replacement. Engineers don’t want wholesale ripouts. The next wave must sit on top of existing stacks (enhancing JIRA, Confluence, Slack, etc.) and extract usable, structured intelligence from their chaos.

Our Prescription: The Knowledge Refinery

The survey didn’t just confirm the problem. It put a price tag on inertia. The failed cure of the first wave was slapping generic AI interfaces over decayed datasets—faster ways to serve wrong answers. The real solution is to refine the raw operational signal into a structured, validated asset before any automation or recommendations are built on top.

We don’t replace JIRA. We don’t chase illusions of “smarter chatbots.” We build an intelligence layer that:

- Ingests fragmented inputs where knowledge is created, not after the fact.

- Structures and surfaces context so answers are traceable and trustable.

- Validates truth through usage feedback, arresting decay instead of amplifying it.

- Feeds dependable signal downstream, turning knowledge into operational leverage.
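To make the four stages concrete, here is a minimal sketch in Python. It is an illustration under stated assumptions, not a description of the actual architecture: the `KnowledgeItem` and `KnowledgeStore` classes, the method names, the ticket IDs, and the 0.6 trust threshold are all hypothetical. It only shows how usage feedback might gate what reaches downstream automation.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KnowledgeItem:
    """One structured piece of operational knowledge (hypothetical schema)."""
    source: str         # where it was captured, e.g. a ticket or page ID
    summary: str        # the refined, human-readable answer
    helpful: int = 0    # times a user confirmed the answer worked
    unhelpful: int = 0  # times a user reported it stale or wrong

    @property
    def confidence(self) -> float:
        # Laplace-smoothed share of positive feedback; 0.5 with no feedback.
        return (self.helpful + 1) / (self.helpful + self.unhelpful + 2)


@dataclass
class KnowledgeStore:
    items: dict[str, KnowledgeItem] = field(default_factory=dict)

    def ingest(self, source: str, summary: str) -> KnowledgeItem:
        # Stages 1 and 2: capture the input and keep its source for traceability.
        item = KnowledgeItem(source=source, summary=summary)
        self.items[source] = item
        return item

    def record_feedback(self, source: str, worked: bool) -> None:
        # Stage 3: validate through usage feedback.
        item = self.items[source]
        if worked:
            item.helpful += 1
        else:
            item.unhelpful += 1

    def trusted(self, threshold: float = 0.6) -> list[KnowledgeItem]:
        # Stage 4: only items above the (assumed) threshold flow downstream.
        return [i for i in self.items.values() if i.confidence >= threshold]


store = KnowledgeStore()
store.ingest("TICKET-1", "Restart the cache after deploying config changes.")
store.ingest("TICKET-2", "Use the legacy endpoint for batch exports.")
store.record_feedback("TICKET-1", worked=True)
store.record_feedback("TICKET-1", worked=True)
store.record_feedback("TICKET-2", worked=False)

for item in store.trusted():
    print(item.source, round(item.confidence, 2))
```

In this sketch, an answer that users repeatedly confirm rises above the threshold, while one reported as wrong drops out of what automation is allowed to consume. A real system would likely also weight feedback by recency and reviewer.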

The Strategic Gap Most Companies Ignore

The winners over the next decade will stop treating these symptoms as separate problems. They will stop “fixing tools” and start managing knowledge as a core strategic asset. That means measuring where decay happens, plugging the leaks, and building systems that capture truth as it’s created rather than reconstructing it after the fact.
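As one possible starting point for measuring where decay happens, the sketch below computes the share of knowledge records in each system that have not been verified recently. The `stale_share` function, the 180-day cutoff, the system names, and the sample dates are all assumptions made for illustration; the survey does not prescribe any such metric.

```python
from collections import defaultdict
from datetime import date, timedelta


def stale_share(records, today, max_age_days=180):
    """Return {system: fraction of records not verified within max_age_days}."""
    cutoff = today - timedelta(days=max_age_days)
    total = defaultdict(int)
    stale = defaultdict(int)
    for system, last_verified in records:
        total[system] += 1
        if last_verified < cutoff:
            stale[system] += 1
    return {system: stale[system] / total[system] for system in total}


# Hypothetical sample: (system, date the record was last verified).
records = [
    ("wiki", date(2023, 1, 10)),
    ("wiki", date(2025, 5, 1)),
    ("tickets", date(2025, 4, 20)),
]
report = stale_share(records, today=date(2025, 6, 1))
print(report)
```

A report like this points at the systems where the leaks are largest, which is where capture-at-creation is likely to pay off first.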

Conclusion

The Second Wave of AI tooling isn’t about more generation; it’s about making what you already have dependable and scalable. The Pragmatic Engineer survey just confirmed the leverage point: fix knowledge decay first, and reliable automation follows. If you’re responsible for scaling software teams, audit your knowledge flow today: where decay happens, which layers consume that signal, and which bolted-on AI is amplifying entropy. Fix the decay before you build automation; anything else is fragile by design.

Read the full survey to map where your tool stack exposes decay. Then compare that to how your current AI/automation layers consume and trust knowledge. Anything built on unrefined signal is fragile by design.