NVIDIA’s new paper validates a core CAA idea: use small, specialist models inside a deterministic controller. Here’s what that means for pilots and procurement, and how our Friction Audit finds the right SLM candidates for agentic AI in your stack.

NVIDIA’s recent paper on SLMs for agentic AI argues for what we’ve been building: small specialist models orchestrated by deterministic controllers outperform monolithic LLM-only designs on routine, high-volume tasks. This post explains why SLMs and modular agents cut cost, latency, and risk, how that maps to our Cognitive Agentic Architecture (CAA), and how the Friction Audit finds the best SLM candidates in your operations.

What NVIDIA found—SLMs for Agentic AI boiled down

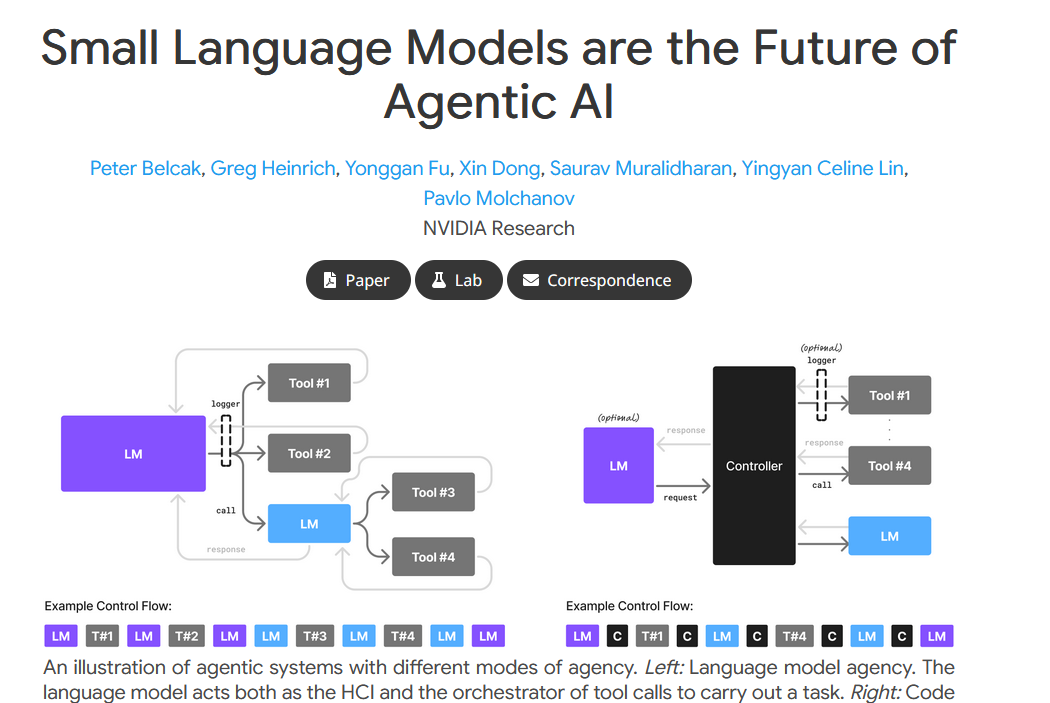

The paper’s core claim: many agent invocations are narrow, repetitive, and low-variance — perfect for compact, fine-tuned models. They call out two modes of agency: (1) language-model agency where the model both reasons and orchestrates, and (2) code agency where a dedicated controller (code) orchestrates tool calls while models supply narrow expertise. The economics and latency benefits of SLMs are clear: cheaper inference, lower latency, on-prem or edge deployment, and faster iteration via minifinetuning. For production agents this reduces cost and operational risk while preserving quality where it matters.

Code Agency → our CAA (SLM vs LLM in agentic AI)

NVIDIA’s “Code Agency” diagram is basically a one-line validation of CAA: separate orchestration from reasoning. In CAA the deterministic controller is the canonical state machine — it routes events, enforces contracts, and invokes specialist models as tools. NVIDIA independently converges on this split; that convergence strengthens the engineering case for our Intelligence Layer and Knowledge Refinery. (We mapped similar patterns from Netflix, Confluent, Trade Republic and other teams in prior posts.)
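The orchestration/reasoning split can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the actual CAA implementation: the `Controller` class, its `register` and `handle` methods, and the keyword-based `classify_ticket` stub (standing in for a fine-tuned SLM) are all hypothetical names, and the "contract" is reduced to a set of required result keys.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, FrozenSet, List, Tuple

# A specialist is any callable that turns a payload into a result dict.
Specialist = Callable[[dict], dict]


@dataclass
class Controller:
    """Hypothetical deterministic controller: routes events and checks contracts."""
    routes: Dict[str, Tuple[Specialist, FrozenSet[str]]] = field(default_factory=dict)
    audit_log: List[str] = field(default_factory=list)

    def register(self, event_type: str, specialist: Specialist, required_keys) -> None:
        # The contract here is simply "the result must contain these keys".
        self.routes[event_type] = (specialist, frozenset(required_keys))

    def handle(self, event: dict) -> dict:
        event_type = event["type"]
        if event_type not in self.routes:
            raise KeyError(f"no route registered for event type {event_type!r}")
        specialist, required = self.routes[event_type]
        result = specialist(event["payload"])
        missing = required - set(result)
        if missing:
            raise ValueError(f"contract violation, missing keys: {sorted(missing)}")
        self.audit_log.append(event_type)  # record only events that passed the contract
        return result


def classify_ticket(payload: dict) -> dict:
    # Stand-in for a fine-tuned SLM classifier (assumption: keyword match only).
    text = payload["text"].lower()
    label = "password_reset" if "password" in text else "other"
    return {"label": label}


controller = Controller()
controller.register("ticket", classify_ticket, required_keys=["label"])
result = controller.handle({"type": "ticket", "payload": {"text": "Reset my password"}})
print(result)
```

The model never decides what runs next; the routing table does. Swapping the stub for a real model call would not change the control flow, which is the point of keeping orchestration in code.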

Recommendations → our product thesis (SLMs, modular agents, PEFT)

NVIDIA’s practical recommendations map directly to what we sell:

- Prioritize SLMs for cost-effective deployment. Our Sovereign Stack is built to run efficient open models for routine tasks: lower latency, lower infra cost, fewer vendor lock-in risks.

- Design modular, heterogeneous agents. CAA = Mixture-of-Experts in production: specialist agents handle deterministic, repeatable work; the controller enforces contracts and hands off to larger models for edge reasoning.

- Leverage rapid specialization (PEFT). Fine-tuning compact models for a domain is cheap and fast. We use PEFT techniques in pilots to reach high accuracy with minimal compute overhead.
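To make "minimal compute overhead" concrete, here is a back-of-the-envelope sketch that takes LoRA as one example of a PEFT method. That choice is our assumption for illustration; the post does not say which PEFT technique is used in pilots. LoRA freezes a weight matrix of shape `d_out × d_in` and trains two low-rank factors instead, so the trainable parameter count for that matrix is `rank * (d_out + d_in)`. The layer size and rank below are illustrative values, not measurements.

```python
def lora_trainable_params(d_out: int, d_in: int, rank: int) -> int:
    # LoRA adds two low-rank factors: B (d_out x rank) and A (rank x d_in).
    return rank * (d_out + d_in)


def full_params(d_out: int, d_in: int) -> int:
    # Full fine-tuning updates every entry of the weight matrix.
    return d_out * d_in


d_out = d_in = 4096  # illustrative layer size, not taken from the post
rank = 8             # illustrative LoRA rank

lora = lora_trainable_params(d_out, d_in, rank)
full = full_params(d_out, d_in)
print(f"{lora} of {full} weights trainable ({lora / full:.2%})")
```

For this one matrix, the adapter trains well under 1% of the weights that full fine-tuning would touch, which is why specialization can be iterated quickly.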

Commercial implications for industrial buyers

For industrial operations, these are not academic tweaks — they change procurement math. SLMs mean you can run inference on-prem or in constrained cloud environments, hitting sub-second SLAs for operator assistance and preserving data boundaries. They cut TCO for long-running pilots and make it easier to justify production rollouts: rather than buying heavy, monolithic model contracts, buyers can budget for modular SLM nodes and a deterministic control plane. For vendors and system integrators, SLM-first pilots lower entry friction: cheaper PoCs, faster time-to-value, and an easier path to the Kafka+Flink learning stack when the signal justifies it.

How this changes the Friction Audit

Our Friction Audit includes an SLM-readiness check. In two weeks we deliver: (a) a ranked shortlist of SLM-candidate tasks, (b) exportable event + outcome samples for training, and (c) a migration prescription: either a low-cost SLM pilot or the Kafka+Flink roadmap for learning-first scale.

SLM-readiness signals we check (copyable thresholds)

- Repetitive-ticket share ≥25–33%: strong candidate for SLM automation.

- Category with ≥100 labeled examples: candidate for an SLM classifier (empirical accuracy ~95%+).

- Latency SLA ≤500–1000 ms or on-prem requirement: SLM likely required.

- Low variance in decision logic (deterministic rules apply): SLM + controller is ideal.

If your process hits two or more signals, a low-cost SLM pilot is usually the fastest path to measurable ROI.
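The checklist above can be expressed as a small scoring function. This is a rough sketch, not the audit's actual tooling: `ProcessProfile`, `readiness_signals`, and `pilot_recommended` are hypothetical names, and where the thresholds are given as ranges we assume the more permissive bound (25% repetitive share, 1000 ms latency SLA).

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class ProcessProfile:
    repetitive_share: float          # fraction of tickets that are repetitive, 0..1
    labeled_examples: int            # labeled examples available for the category
    latency_sla_ms: Optional[float]  # None if the process has no latency SLA
    on_prem_required: bool
    deterministic_logic: bool        # True if the decision logic is low-variance


def readiness_signals(p: ProcessProfile) -> List[str]:
    signals = []
    if p.repetitive_share >= 0.25:  # assumed lower bound of the 25-33% range
        signals.append("repetitive_share")
    if p.labeled_examples >= 100:
        signals.append("labeled_examples")
    # Assumed upper bound of the 500-1000 ms range.
    if p.on_prem_required or (p.latency_sla_ms is not None and p.latency_sla_ms <= 1000):
        signals.append("latency_or_on_prem")
    if p.deterministic_logic:
        signals.append("deterministic_logic")
    return signals


def pilot_recommended(p: ProcessProfile) -> bool:
    # Two or more signals suggest an SLM pilot, per the rule of thumb above.
    return len(readiness_signals(p)) >= 2


helpdesk = ProcessProfile(
    repetitive_share=0.30,
    labeled_examples=150,
    latency_sla_ms=None,
    on_prem_required=False,
    deterministic_logic=False,
)
print(readiness_signals(helpdesk), pilot_recommended(helpdesk))
```

Returning the list of matched signals, rather than only a yes/no, keeps the result explainable when it goes into a ranked shortlist.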

Caveats & when LLMs still matter

SLMs aren’t a universal replacement. Use LLMs where context is open-ended, where the task requires deep multi-step reasoning or multimodal inputs, or where novelty and creativity are essential. The right architecture is heterogeneous: small models for routine work and larger models for exceptions, all coordinated through the controller that CAA prescribes.
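One simple way to implement "small models for routine work, larger models for exceptions" is confidence-based escalation. The sketch below is an assumption on our part, not something the paper or CAA prescribes: the `route` function, the `0.8` threshold, and both toy models are hypothetical, and a real deployment would need a calibrated confidence signal.

```python
from typing import Callable, Tuple

SmallModel = Callable[[str], Tuple[str, float]]  # returns (answer, confidence)
LargeModel = Callable[[str], str]


def route(task: str, small_model: SmallModel, large_model: LargeModel,
          min_confidence: float = 0.8) -> Tuple[str, str]:
    # Try the small model first; escalate only when it is not confident enough.
    answer, confidence = small_model(task)
    if confidence >= min_confidence:
        return answer, "slm"
    return large_model(task), "llm"


def toy_slm(task: str) -> Tuple[str, float]:
    # Stub: confident only on a known routine request.
    if "invoice status" in task.lower():
        return "lookup_invoice", 0.97
    return "unknown", 0.20


def toy_llm(task: str) -> str:
    # Stub for a larger model handling the exception path.
    return "escalated_to_large_model"


routine = route("Invoice status for order 1182?", toy_slm, toy_llm)
unusual = route("Draft a root-cause analysis for last night's outage", toy_slm, toy_llm)
print(routine, unusual)
```

The escalation decision lives in the controller code, so the share of traffic reaching the larger model is observable and tunable through a single threshold.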

Our Offer: Friction Audit Lite

If you want to know which parts of your operations are SLM candidates and which need a full learning stack, run the Friction Audit Lite: a 2-week, data-driven diagnostic that returns a ranked SLM/learning roadmap and exportable labels. Launch it here:

- Launch Your Friction Audit Lite (€8,000) – 2-week delivery · refundable credit toward Project Foundation if you convert within 30 days.

- Learn More About the Audit Process

BSFZ-certified R&D • MIT Project NANDA validated diagnosis • NVIDIA Research validated architecture • anonymized pilot outcomes available on request.